Empathy and sarcasm: How AI can learn to understand people better

About Prof. Dr. Lucie Flek

Prof. Dr. Lucie Flek is a professor in the Department of Mathematics and Computer Science at Philipps University Marburg, where she leads the Conversational AI and Social Analytics (CAISA) Lab.

Prior to her appointment in Marburg, Flek studied computer science at the Faculty of Nuclear Physics in Prague and worked at the Large Hadron Collider at CERN. Her expertise in big data processing then took her to Google, where she turned to machine understanding of language.

From there, it was back to science: she received her PhD in 2016 at TU Darmstadt. Flek was a Visiting Researcher at the University of Pennsylvania, USA, and a Research Fellow at University College London, England.

She then worked for three years as a Research Program Manager in Amazon’s Alexa AI program, then as an Associate Professor of AI in Mainz, Germany. Flek has been in Marburg since 2021.

Research at the interface of psychology and AI

Prof. Dr. Lucie Flek has an impressive resume: she has lived, studied, worked and researched in seven countries, held positions at tech giants such as Google and Amazon, and combines expertise in nuclear physics, psychology, computer science and artificial intelligence. Now, the scientist researches artificial intelligence, language and social behavior.

“Language is even more fun than physics because it depends on how people behave. It’s often very irrational and not everything follows rules like in physics,” Flek says. That’s why she moved from analyzing big data at CERN’s massive particle accelerator to big data processing at Google.

Working on language is interdisciplinary, according to Flek: “To really understand what a sentence means, you need additional information about human behavior and the social context of a statement.”

Flek left her jobs at Google and Amazon to pursue her passion for “why” questions in academia. Tech companies offer access to interesting data and plenty of computing power, but they leave little time for details and in-depth questions.

In Marburg, Flek is now combining all the knowledge she has absorbed so far in her varied career path. She works at the intersection of machine learning, classical computer science, language, the psychology and sociology of social media, and human-computer interaction.

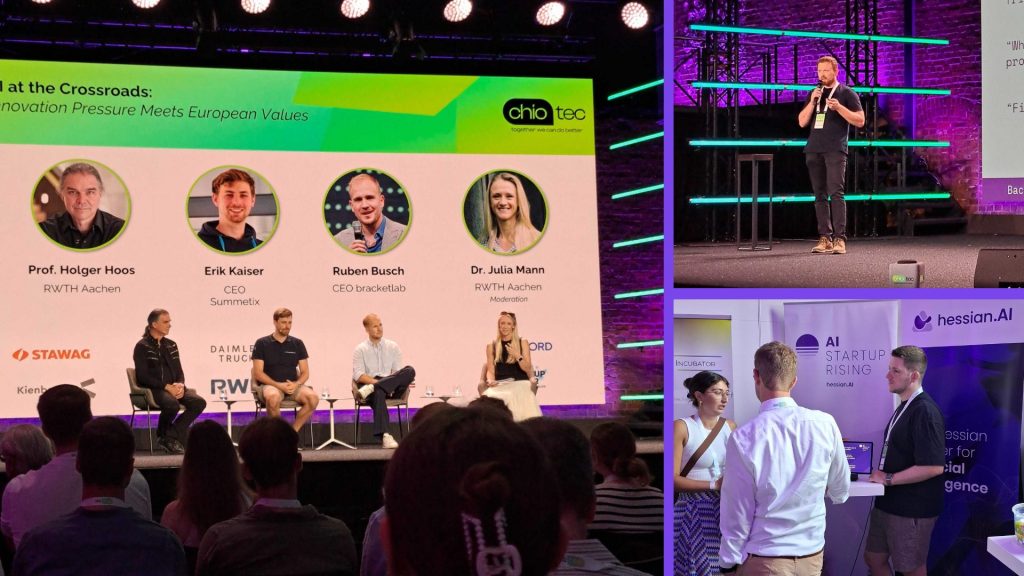

hessian.AI has been a great help to Flek in building a research network and in outreach. For example, one of her post-docs is directly supported by the center. In addition, hessian.AI creates access to industry, where Flek’s AI agents will help improve communication between stakeholders.

When universities need to compete with industry

hessian.AI could also provide computing resources. That’s key because natural language processing has changed radically in recent years: big companies like Google and OpenAI are dominating the increasingly commercial AI industry with their models. Big-tech models are getting bigger almost by the month and increasingly opaque as companies withhold details about training processes.

Flek sees this development as a major challenge, saying that it is difficult for a single university, a nation, or even Europe as a whole to keep up with the infrastructure of U.S. industry.

At the same time, she says, it is also the role of science to challenge these large industrial applications. There are numerous ethical issues, such as in which areas large models can be usefully applied and where the risks lie. Research needs to ensure that large AI models become better and more useful for society.

Empathic computers and sarcasm analysis

In her CAISA lab, the scientist is researching two major topics: better conversational agents, and AI systems that better understand what people write on social media.

Specifically, her team is developing conversational agents that understand people more empathetically and serve as AI tutors for people who give advice to others. Such systems could help people in teaching, counseling or medicine better predict how students or patients will react.

Medical students, for example, could interact with an AI patient and learn how to talk to patients about a serious diagnosis in an emergency.

In social media analysis, Flek focuses on better understanding people: “People don’t always behave the same way. You have to know more about a person, and who they’re talking to, to interpret a sentence correctly.”

The same sentence can mean very different things, making it difficult to evaluate a statement. Context helps here: sarcasm, for example, occurs more often among friends or in political affinity groups.

AI systems that understand us better contribute to more transparent and natural interactions, Flek says, not only between humans and computers but also among humans themselves.