RAI

Reasonable Artificial Intelligence

About RAI

Over the last decade, deep learning (DL) has led to groundbreaking progress in artificial intelligence, but current DL-based AI systems are unreasonable in many ways:

(1) They are developed and deployed in unreasonable ways, requiring unreasonably large models, data, computations, and infrastructure, ultimately giving a monopoly to a few big companies with the necessary resources;

(2) They cannot reason or easily handle unfamiliar situations and nuanced context, and they lack reasonable common sense and abstraction capabilities;

(3) They neither improve continuously over time nor learn through interaction or adapt quickly; instead, they need frequent retraining, which leads to unreasonable economic and environmental costs. Such unreasonable learning makes them brittle and may even be harmful to society; it needs to be fixed at its core, not just by brute-force scaling of resources or the application of band-aids.

Within Hessian.AI, we are aiming at a new generation of AI, which we call Reasonable Artificial Intelligence (RAI). This new form of AI will learn in more reasonable ways, with models being trained in a decentralized fashion, continuously improving over time, building an abstract knowledge of the world, and with an innate ability to reason, interact, and adapt to their surroundings. This idea of Reasonable Artificial Intelligence has been proposed as a Cluster of Excellence.

The foundations of the Cluster of Excellence “Reasonable Artificial Intelligence – RAI” (EXC 3057), within the framework of the Excellence Strategy of the German Federal and State Governments, are laid in 3AI. 3AI is funded as a cluster project by the Hessian Ministry of Higher Education, Research, Science and the Arts from 2021 to 2025. In addition, five LOEWE professorships and two Humboldt professorships will contribute to the idea of reasonable AI. Hessian.AI, which is supported with about €38 million, will provide the compute for the research on reasonable AI.

RAI is coordinated by Prof. Kristian Kersting, Prof. Mira Mezini, and Prof. Marcus Rohrbach (all TU Darmstadt).

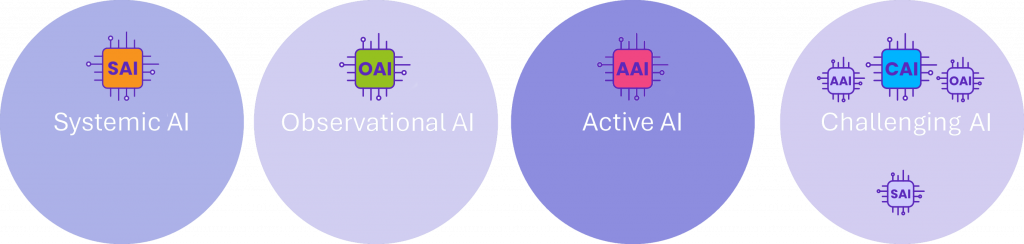

RAI Structure

RAI is structured in four research labs, each tackling an important aspect of RAI:

(1) “Systemic AI” works on software and systems methods that enable modular, collaborative, decentralized, interactive, and incremental training of RAIs and support their efficient integration into existing large-scale software systems;

(2) “Observational AI” rethinks contextual learning and brings together different AI regimes to inject common-sense knowledge;

(3) “Active AI” focuses on continual and adaptive lifelong learning with active exploration;

(4) “Challenging AI” develops challenges and benchmarks to monitor and evaluate RAI’s development.

The PIs from different disciplines will work together as multidisciplinary teams, crossing boundaries, fostering diversity, and creating a new learning-centered computing paradigm that will change the way we develop and use AI.

Systemic AI

“Systemic AI” (SAI) aims to develop systemic foundations of RAIs to increase the efficacy and quality of building RAIs and embedding them into large-scale software systems. We will raise the level of abstraction, akin to how high-level programming historically shifted development efforts away from low-level issues and broadened the class of developers who can build sophisticated software systems. To this end, we will embrace differentiable programming, generalize it to multi-paradigm neuro-symbolic programming, and map high-level programming abstractions to hybrid AI architectures composed of many AI modules. Crucially, we aim for neuro-symbolic programming foundations that innately support collaborative, decentralized, interactive, and incremental learning; this will not only improve the utility of data subject to privacy and ownership regulations, but also enable efficient use of computational resources and support the development of AIs that can be easily modified even after deployment. We will also go beyond the programming and embedding of RAIs: we will rethink and redesign both core building blocks of software stacks (such as databases) and user interfaces so that they can actively adapt their functionality to RAI software and enable RAI to learn from interactions with the outside world.

Observational AI

“Observational AI” (OAI) rethinks observational AI algorithms from the ground up and lays new algorithmic foundations for the computational and mathematical modelling of contextual learning algorithms. As one ingredient alone is not sufficient for designing RAIs, we will focus on algorithms that are composable from many AI modules comprising machine learning, optimization, and reasoning; the composed models can, for example, be spatiotemporal, physical, domain-specific, or cognitive. One key challenge is bringing together different AI regimes that are currently separated. For example, low-level perception (e.g., recognizing a road sign) is typically handled by standard machine learning, whereas high-level reasoning (e.g., concluding that a road sign showing 120 km/h does not make sense in a village) is handled using logical and probabilistic representations. A second challenge is to inject common-sense knowledge into differentiable models. Jointly addressing both will allow RAIs to tackle observational tasks in open worlds by combining data with knowledge that is contextual and descriptive. Furthermore, we will integrate symbolic-descriptive models, which will be used to build explainable approaches to Observational AI, since symbolic explanations enable sharing knowledge and coordinating tasks with other agents, including humans.
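The road-sign example above can be illustrated with a minimal Python sketch. It is not RAI's method: the perception module is replaced by hard-coded candidate readings, and the names `SignReading`, `PLAUSIBLE_MAX_KMH`, `plausible`, and `interpret`, as well as the per-context speed bounds, are hypothetical and chosen only for illustration. The sketch shows one simple way a symbolic rule about context could filter the output of a learned perception model.

```python
from dataclasses import dataclass
from typing import List, Optional

# Hypothetical upper bounds on plausible speed limits (km/h) per context.
# These numbers are illustrative assumptions, not taken from the text.
PLAUSIBLE_MAX_KMH = {"village": 50, "rural_road": 100, "motorway": 130}


@dataclass(frozen=True)
class SignReading:
    """Stand-in for a perception module's output: a candidate limit and its score."""
    limit_kmh: int
    score: float  # perception confidence in [0, 1]


def plausible(reading: SignReading, context: str) -> bool:
    """Symbolic check: is this speed limit consistent with the given context?"""
    return reading.limit_kmh <= PLAUSIBLE_MAX_KMH[context]


def interpret(candidates: List[SignReading], context: str) -> Optional[SignReading]:
    """Return the highest-scoring candidate that passes the symbolic check, if any."""
    consistent = [c for c in candidates if plausible(c, context)]
    return max(consistent, key=lambda c: c.score, default=None)


# Perception slightly prefers "120", but the village context rules it out.
candidates = [SignReading(120, 0.55), SignReading(20, 0.45)]
print(interpret(candidates, "village"))
```

Filtering after perception is the simplest possible coupling; the text's goal of injecting knowledge into differentiable models would instead require the constraint to influence learning itself.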

Active AI

“Active AI” (AAI) rethinks lifelong learning and acting based on reinforcement learning agents that evolve in complex (virtual) environments with simulated physics. These agents will use Observational AI for multi-modal perception, as well as for planning and learning, and will be driven by knowledge- and competence-based exploration of the world. They will thus become proficient at interacting with the world with less and less external supervision. Ultimately, the agents will be allowed to define their own learning goals and practice how to achieve them. A central question, to be answered in close collaboration with the Systemic AI area, will be how to self-generate abstract representations and concepts from which the agents can derive their learning goals. Our hypothesis is that inductive biases (knowledge and assumptions that the learner uses to predict) will be key to learning efficiently from “less data”. We envision that endowing “starting algorithms” with these biases will help them learn invariances, which, in turn, will allow the agent to generalize across environments and tasks. This is likely to result in faster, more robust, and more scalable continual and reinforcement learning algorithms. We also expect novel learning algorithms that require less hand-tuning and can be adapted more easily to new tasks. Such algorithms can offer insights into the properties of their components, such as state and action representations, rewards, transition models, policies, and value functions.

Challenging AI

“Challenging AI” (CAI) uses and develops challenges and benchmarks to reveal and quantify limitations of AI approaches and to orient RAI. When implemented and interpreted appropriately, benchmarks enable the broader community to better understand AI technology and influence its trajectory. Consider, e.g., how foundation models have recently advanced AI applications. At the core, they are often based on language models, which are black boxes that take in data and generate, say, natural text, images, or code. Despite their simplicity, after they have been trained on broad data at an immense scale, they can be adapted (e.g., prompted or fine-tuned) to a myriad of downstream scenarios. Since it is possible to consider neither all the scenarios nor all the desiderata that (could) pertain to foundation models, we aim at recognizing the incompleteness of foundation models and other AI models in order to motivate and challenge RAI models. Broadening the evaluation has been a continuing trend, also summarized by the HELM initiative. The results show that a considerable gap exists between AI and human-level learning. We will collect existing challenges driven by insights from cognitive science (e.g., Winograd problems or Kandinsky patterns) on which current AI systems still fail, use them for monitoring, and generalize them to the multimodal and continual setting. Crucially, we utilize cognitive science to guide and inform the development of more reasonable AI systems.

Spokespersons

“Despite impressive advances, current AI systems have demonstrated some significant weaknesses. Although applications such as ChatGPT can write comprehensive texts on complex subjects, they lack the ability to apply common sense. They are often incapable of understanding multi-layered interrelationships and also require huge amounts of resources. Developing a truly reasonable AI, which minimises risks and works efficiently, still remains a challenge and is something we are addressing with ‘Reasonable AI’ and hessian.AI.” Mira Mezini

“In a world in which technological dependencies are increasingly fuelling geopolitical tension, the development of a sovereign European artificial intelligence is of vital importance. Europe must be able to set its own standards and develop independent, trustworthy technologies so that it can remain resilient both economically and socially. This is where ‘Reasonable AI’ will play a role by developing the next generation of reasonable AI.” Kristian Kersting

“We are convinced that RAI will shape the future of AI in Germany and around the world. New, efficient language models, such as the recently launched DeepSeek, have demonstrated how quickly and efficiently advances in AI development can be achieved. These models not only open up technological opportunities and possibilities to conserve resources but also highlight the need to develop trustworthy and reasonable AI. Europe has a unique opportunity here to take on a pioneering role by combining innovations with ethical standards, efficiency and transparency.” Marcus Rohrbach

Principal Investigators

- Angela Yu

- Anna Rohrbach

- Carlo d’Eramo

- Carsten Binnig

- Constantin Rothkopf

- Emtiyaz Khan

- Gemma Roig

- Georgia Chalvatzaki

- Heinz Koeppl

- Hilde Kühne

- Iryna Gurevych

- Isabel Valera

- Jan Gugenheimer

- Jan Peters

- Justus Thies

- Kristian Kersting

- Marcus Rohrbach

- Martin Mundt

- Mira Mezini

- Simone Schaub-Meyer

- Stefan Roth

- Susann Weißheit

Board of Fellows

Science Management and Administration

Leading Institution

Institutions of Participating Researchers

- Technical University of Darmstadt (Binnig, Carsten; Chalvatzaki, Georgia; Gugenheimer, Jan; Gurevych, Iryna; Kersting, Kristian; Khan, Emtiyaz; Koeppl, Heinz; Mezini, Mira; Peters, Jan; Rohrbach, Anna; Rohrbach, Marcus; Roth, Stefan; Rothkopf, Constantin; Schaub-Meyer, Simone; Thies, Justus; Yu, Angela)

- Julius-Maximilians-University Würzburg (D’Eramo, Carlo)

- Goethe-University Frankfurt am Main (Roig, Gemma)

- University of Tübingen (Kuehne, Hilde)

- Saarland University (Valera, Isabel)

- University of Bremen (Mundt, Martin)

Institutional Cooperation Partners